Last Updated on February 17, 2024 by KnownSense

Truly mastering something is so much more than just knowing the commands. Real experts know how stuff works under the hood. With that in mind, the plan for this article is to learn about the Docker architecture and its internal workings.

Docker is a traditional client-server app. The client is basically the CLI, or the command line, and the server bits are what build and run the containers. Now, if you’re on a laptop, maybe with Docker Desktop or something, you’re going to have the client and the server on the same machine. In a production environment though, you’re a lot more likely to use the client on your local laptop, but have it talk to a server in the cloud or somewhere else remote.

When you issue commands through the CLI, it converts them into the appropriate API calls and posts them to the Docker daemon. Then a bunch of hard work goes on behind the scenes, and out pops a container. That is the super high-level architecture.
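To make the "commands become API calls" step concrete, here is a minimal sketch in Python. It assumes a heavily simplified `docker run IMAGE CMD...` and maps it to the two Docker Engine API requests that create and then start a container. The helper name `docker_run_to_api_calls` is hypothetical, and the real client does more than this (pulling a missing image, attaching to output, and so on).

```python
import json


def docker_run_to_api_calls(image, command):
    """Translate a simplified `docker run IMAGE CMD...` into Engine API requests.

    Hypothetical helper for illustration only. It returns a list of
    (method, path, body) tuples and sends nothing over the wire.
    """
    create_body = {"Image": image, "Cmd": list(command)}
    return [
        # Step 1: ask the daemon to create the container; it replies with an ID.
        ("POST", "/containers/create", json.dumps(create_body)),
        # Step 2: start it. {id} stands for the ID returned by the create call.
        ("POST", "/containers/{id}/start", None),
    ]


for method, path, body in docker_run_to_api_calls("alpine", ["echo", "hello"]):
    print(method, path, body or "")
```

On a typical Linux install these requests would travel over a local Unix socket, and in a remote setup over the network, which is why the same client can drive a daemon on another machine.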

We write our apps in whatever our favorite languages are. Docker doesn’t care. We then use docker build to package them as images, docker push to upload them to a registry, and docker run to execute them on the server. The docker build command creates OCI images, and docker run creates OCI containers, or that’s what we sometimes call them. But that raises the question, what’s the OCI? The OCI (Open Container Initiative) is a standards body responsible for low-level container-related standards. Images that conform to the OCI image spec are called OCI images, and the containers run from them are called OCI containers.

Any time we see the words image, Docker image, or OCI image, we mean the same thing, and the same goes for containers. So container, Docker container, and OCI container, they all mean the same thing as well. And you know what? Docker and Kubernetes both work with OCI images and OCI containers.

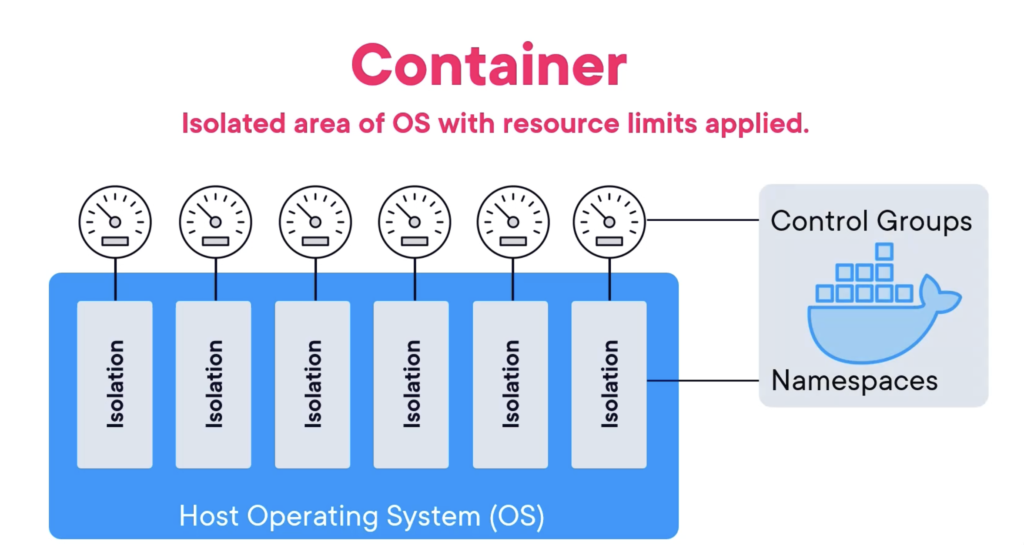

So, a container or an OCI container is just a construct that runs a process in an isolated environment. To achieve that, we ring-fence an area of an OS and we throw a bunch of limits on resource consumption. Those are the fundamentals of an OCI container: a fenced-off area of an OS, with a bunch of limits, designed to run a process. Now, building containers requires a bunch of low-level kernel primitives. The most important of these are namespaces and control groups.

So, it’s the namespaces that carve the OS into virtual OSes, and then it’s the control groups that impose the limits. Once all of that is constructed, we fork a process into it and it runs in isolation. Pictures make everything look simple. But these are proper low‑level kernel constructs. And out of the box, oh, they are a beast to work with. In fact, they’ve been around in the Linux kernel for absolutely ages. It’s just they were so hard to use. Nobody really bothered. Well, that was until Docker came along, and this really was Docker’s superpower. It made all that really hard kernel stuff easy to use, so it made containers easy.

Docker engine is a bit like a car engine. It’s really complex and it’s made of loads of smaller parts, but we consume it via simplified controls. So on a car, we use a relatively simple steering wheel and some foot pedals. But behind the scenes, oh my goodness, there’s all kinds of stuff going on, like, of course, internal combustion. There’s belts, gears, diffs, exhaust systems, you name it. Well, it’s kind of similar for Docker. We use the simple CLI, but under the covers, there is a whole bunch of moving parts. We use the CLI part of the Docker client to request a new container. The client then converts that command into the appropriate API request to the engine here, like maybe create me a container. Then the engine, that pulls together all the hard kernel stuff. And then like we said before, out pops a container, and it is honestly a beautiful thing. Dead simple command, out pops a container with all the hideous machinery below the hood all hidden away.

Kernel Internals

Right then, first things first. Docker, and I suppose modern containers, absolutely started on Linux. And you know what? It is fair to say that is where most of the action still is. However, both Docker and Microsoft put a lot of work into bringing containers to Windows. Now we’ll get into this a bit later. But for now, the Docker experience is pretty much identical, whether you’re on Windows or Linux. The commands are the same, the workflow is the same, you name it. Docker looks and feels the same, whether you’re on Windows or Linux or even Mac. But you know what? That’s the UX, or the user experience. The stuff under the hood, like how the two kernels build containers, that’s obviously going to be different.

In this article we will use Linux as the base reference. If you’re a Windows person though, don’t stress. The concepts at least are similar enough.

Linux containers have been around for absolutely ages or at least the kernel stuff to build them has. It’s just, like we said before, it was always too hard. You pretty much had to be a kernel engineer just to get them working. The point is though the stuff in the kernel to build containers has been around since forever, like way before Docker.

The two main kernel primitives for building containers are namespaces (all about isolation) and control groups (all about limits). Yes, they are Linux kernel primitives. But there are rough equivalents in all modern Windows kernels.

Namespace

Namespaces let us take an operating system and carve it into multiple isolated virtual operating systems, a bit like hypervisors and virtual machines.

In the hypervisor world, we take a single physical machine with all of its resources like CPU and memory and all that kind of jazz, and we carve it into multiple virtual machines that each have their own slice of virtual CPU, virtual memory, virtual networking, virtual storage, the whole lot.

In the container world, we use namespaces to take a single operating system with all of its resources, which are higher-level constructs like file systems and process trees and users and stuff, and carve all of that into multiple virtual operating systems called containers. So, each container gets its own virtual root file system, its own process tree, its own eth0 interface, its own root user, the full set.

Then just the same way that VMs look, smell, and feel exactly like physical machines, containers look, smell, and feel exactly like regular operating systems, only, of course, they’re not.

All three containers in the image above are sharing a single kernel on the host, but everything’s isolated. So, even though they’re on the same host, processes in one absolutely cannot see processes in the others.

As shown in the image above, we’ve got several different namespaces in the Linux world, and it’s going to be roughly analogous in the Windows world. An OCI container is basically an organized, isolated collection of these. So it’s got its own PID namespace with a PID 1, its own network namespace with an eth0 interface and IP address, and its own root file system. Obviously, we can create more containers, but each one is isolated, and each one looks and feels just like a regular OS. In fact, apps running in any of these have no idea they’re in a container. As far as they’re concerned, it’s just an OS.

The PID namespace gives each container its own isolated process tree, complete with its very own PID 1, meaning processes in one container are, like we said, blissfully unaware of processes in others. The net namespace gives each container its own isolated network stack, so its own NIC, its own IP, routing table, all of that jazz. The mount namespace gives each container its own isolated root file system. That would be the C drive on Windows. The IPC namespace lets processes in the same container access the same shared memory, while keeping it isolated from other containers. The UTS namespace gives every container its own hostname. And the user namespace lets you map user accounts inside a container to different users on the shared host. The typical use case here is mapping the container’s root user to a non-privileged user on the host.
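If you want to see these namespaces for yourself, here is a small Python sketch. It assumes a Linux host with /proc mounted, where each process exposes its namespaces as symlinks under /proc/<pid>/ns. The function name `list_namespaces` is just an illustrative choice, and on a non-Linux system it simply returns an empty result.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, /proc not mounted, or no such process
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target names the namespace type
            # and a numeric identifier for that particular namespace.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable; skip it
    return result


for name, ident in list_namespaces().items():
    print(f"{name:20} {ident}")
```

Two processes in the same container report the same identifiers here, while processes in different containers report different ones for every namespace that is not shared.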

Control Groups

But like any multi-tenant system, there’s always the fear of noisy neighbors. We need something to police the consumption of system resources, which is where control groups come in. Cgroups let us say that any given container gets this amount of CPU, this amount of memory, and maybe this amount of disk I/O. And of course, we can do the same for every container.
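As a rough illustration of what those limits look like, here is a Python sketch that parses two cgroup settings. It assumes the cgroup v2 interface, where a group’s CPU limit lives in a file called cpu.max (a quota and a period in microseconds, or the word max for no limit) and its memory limit lives in memory.max (a byte count, or max). The parser names are hypothetical, and the sample strings are made-up values rather than output from a real host.

```python
def parse_cpu_max(text):
    """Parse cgroup v2 cpu.max ("<quota> <period>") into a CPU count.

    Returns None when the quota is "max", meaning no CPU limit.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text):
    """Parse cgroup v2 memory.max into bytes, or None when unlimited."""
    value = text.strip()
    return None if value == "max" else int(value)


# Sample values for illustration, not read from a real system.
print(parse_cpu_max("50000 100000"))   # 0.5, i.e. half a CPU
print(parse_memory_max("268435456"))   # 268435456 bytes, i.e. 256 MiB
print(parse_cpu_max("max 100000"))     # None, i.e. no limit
```

On a real Linux host these strings would be read from files under /sys/fs/cgroup for the group the container’s processes belong to.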

Namespaces and cgroups are the foundation, but for production-worthy OCI containers we also need a union file system, or some other way of combining a bunch of read-only file systems or block devices, lashing a writeable layer on top, and presenting them to the container as a unified view. So, take these three and we have pretty much got modern containers. And you know what? That’s precisely what Docker did. It came along and made all of this really hard stuff really easy. Modern containers also leverage stuff like capabilities and seccomp, and a bunch of other things, to add security and the like. But these three, these are the foundation. Time to switch tack now and look at what Docker brought to the party.
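The union file system idea is easier to picture with a toy model. The Python sketch below is not how any real union file system is implemented. It just uses plain dictionaries as stand-ins for layers to show the behavior described above: read-only layers stacked bottom to top, one writeable layer on the very top, and a single merged view. The class name `UnionView` and the sample paths are made up for illustration.

```python
class UnionView:
    """Toy union file system: read-only layers plus one writeable top layer."""

    _WHITEOUT = object()  # marker meaning "this path was deleted in the top layer"

    def __init__(self, *read_only_layers):
        # Layers are given bottom-first; later layers shadow earlier ones.
        self.layers = list(read_only_layers)
        self.top = {}

    def read(self, path):
        # The writeable layer always wins, including deletions.
        if path in self.top:
            if self.top[path] is self._WHITEOUT:
                raise FileNotFoundError(path)
            return self.top[path]
        # Otherwise search the read-only layers from the top down.
        for layer in reversed(self.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        self.top[path] = data  # lower layers are never modified

    def delete(self, path):
        self.top[path] = self._WHITEOUT  # hide the path without touching layers


base = {"/etc/os-release": "alpine", "/bin/sh": "<shell>"}
app = {"/app/main.py": "print('v1')"}
view = UnionView(base, app)
view.write("/etc/os-release", "patched")
print(view.read("/etc/os-release"))  # patched
print(base["/etc/os-release"])       # alpine
```

Because writes only ever land in the top layer, many containers can share the same read-only layers while each one sees its own changes.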

Docker

At the very highest level, Docker hides all the hard kernel stuff, and it gives us a powerful CLI and API that make containers easy. For Docker, I think it’s good to think of it as two pieces for now, the client and the engine. Sometimes we call the engine the daemon or just Docker.

You can read a bit of the history of Docker because, as is always the case with history, it really helps us understand why things are the way they are now.

Docker’s engine operates via the Docker client, daemon, containerd, and runc, orchestrating container creation through a series of interactions. The CLI commands trigger actions in the daemon, which delegates to containerd and subsequently to runc at the OCI layer for container instantiation. This intricate process ensures modularity, with components like containerd and runc being independently reusable. For an in-depth understanding of Docker’s mechanisms and its componentized architecture, explore further details on this dedicated page.
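To give a feel for what reaches the OCI layer, here is a hedged Python sketch that builds a tiny fragment of an OCI runtime configuration, the config.json that an OCI runtime such as runc reads from a bundle directory. It is deliberately partial: a real configuration carries many more fields, and the function name, the version string, and the default values below are assumptions chosen for illustration.

```python
import json


def minimal_runtime_config(args, rootfs="rootfs", hostname="demo"):
    """Build a partial, illustrative OCI runtime config as a dict.

    Not a complete or validated config. It only shows where the process
    to run, the root file system, and the namespaces are declared.
    """
    namespace_types = ["pid", "network", "mount", "ipc", "uts"]
    return {
        "ociVersion": "1.0.2",  # assumed spec version for this sketch
        "process": {"args": list(args), "cwd": "/"},
        "root": {"path": rootfs},
        "hostname": hostname,
        "linux": {"namespaces": [{"type": t} for t in namespace_types]},
    }


print(json.dumps(minimal_runtime_config(["echo", "hello"]), indent=2))
```

Notice how the namespaces discussed earlier show up as a plain list in this file. The higher layers work out what the container should look like, and the runtime at the bottom asks the kernel to build it.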

Conclusion

Understanding Docker goes beyond commands; it’s about grasping its internal mechanisms. Docker’s architecture involves the CLI and server (daemon), working together whether on a local machine or remotely. CLI commands trigger API calls to the daemon, orchestrating container creation via containerd and runc. Docker simplifies app development, utilizing OCI standards for images and containers, which rely on kernel primitives like namespaces and control groups. Docker’s magic lies in simplifying complex kernel functions, offering a user-friendly CLI.